In an article titled “Italy became the first Western country to ban ChatGPT. Here’s what other countries are doing”, published on April 4, 2023 by CNBC.com, and updated on April 17, 2023, it is reported that Italy has become the first Western country to ban ChatGPT. This claim turns out to be true.

The author of the article is Ryan Browne, Tech Correspondent for CNBC.com, with contributions from Arjun Kharpal, CNBC’s Senior Technology Correspondent.

CNBC is an entertainment and news brand, owned by NBCUniversal, a subsidiary of Comcast Corporation and one of the world leaders in media and entertainment. According to the website of the NBCUniversal company, CNBC is a world leader in business news, which also provides real-time financial market coverage and analysis. Information from similarweb.com shows that the majority of CNBC.com users are in the USA, followed by a smaller percentage of the audience in Canada, the UK, India, Australia and others.

The news network’s article states that the week before the 4th of April “the Italian Data Protection Watchdog ordered OpenAI to temporarily cease processing Italian users’ data amid a probe into a suspected breach of Europe’s strict privacy regulations”. The claim is factual, has a clearly defined subject (new AI technologies and the security of online user data), and turns out to be true.

CNBC states that the Italian regulator, known as Garante, cited a data breach at OpenAI. According to the official OpenAI website, it is true that, as stated in the CNBC article, some users were able to see titles of conversations from other users’ chat history. Furthermore, a check against the official Garante website shows that the Italian authority was, in fact, concerned about the processing of personal data to train the AI, as well as the poorly enforced age restrictions and the factual accuracy of the information provided by ChatGPT. OpenAI, which works in partnership with Microsoft, faced the possibility of a fine of up to 20 million euros or 4% of its annual worldwide turnover if it was unable to rectify the situation within 20 days.

The CNBC article claims Italy isn’t the only country having to deal with fast-developing AI technologies and their effect on society. According to the article under analysis, “other governments are coming up with their own rules for AI, which, whether or not they mention generative AI, will undoubtedly touch on it”. This claim turns out to be correct: according to a Reuters publication, countries including Australia, Britain, China, France, Ireland, Spain and the USA, as well as EU legislators, are taking steps to regulate AI. Only Ireland, the EU and China, however, mention regulating generative AI in particular.

A Harvard Business Review article from 2021 expresses that concerns about digital technology, focused on potential abuse of personal data, have been around for a while. With the development of AI and its many usages, the conversation is evolving into the subject of AI regulations. The first legal framework on AI was proposed by the EU in April of 2021. As AI technologies were developing, this risk-based approach was designed to provide protection from specific challenges AI systems may bring. AI continues to develop, however, and there are still calls for AI regulations, most recently with the CEO of OpenAI, Sam Altman, calling for the US to regulate artificial intelligence. With all of this in mind, it can be concluded that CNBC is correct in stating that it is difficult for governments to keep up with the rapid development of AI.

The news network correctly cites in its article an interview with Sophie Hackford, a futurist and an advisor on the future of food, climate, and agriculture to John Deere & Co. A recent BBC article reports OpenAI CEO Sam Altman’s concerns about AI replacing some jobs in certain fields as well as being used to spread misinformation during elections. It is also noted that Mr Altman does not avoid addressing the ethical questions around AI. This information shows that CNBC’s thesis is factually sound: AI may impact areas such as job security, data privacy, equality and political manipulation through misinformation.

A Reuters publication from April 3, 2023 confirms CNBC’s position that many other governments are in the process of finding ways to regulate generative AI and are even considering following in Italy’s footsteps by banning ChatGPT.

Britain

As for actions taken in the UK, CNBC points out that the government does not aim for the restriction of AI such as ChatGPT, but instead has taken a non-statutory approach, proposing measures, urging all companies and regulators in the sector to work with integrity and accountability. This claim must also be checked by providing supporting evidence.

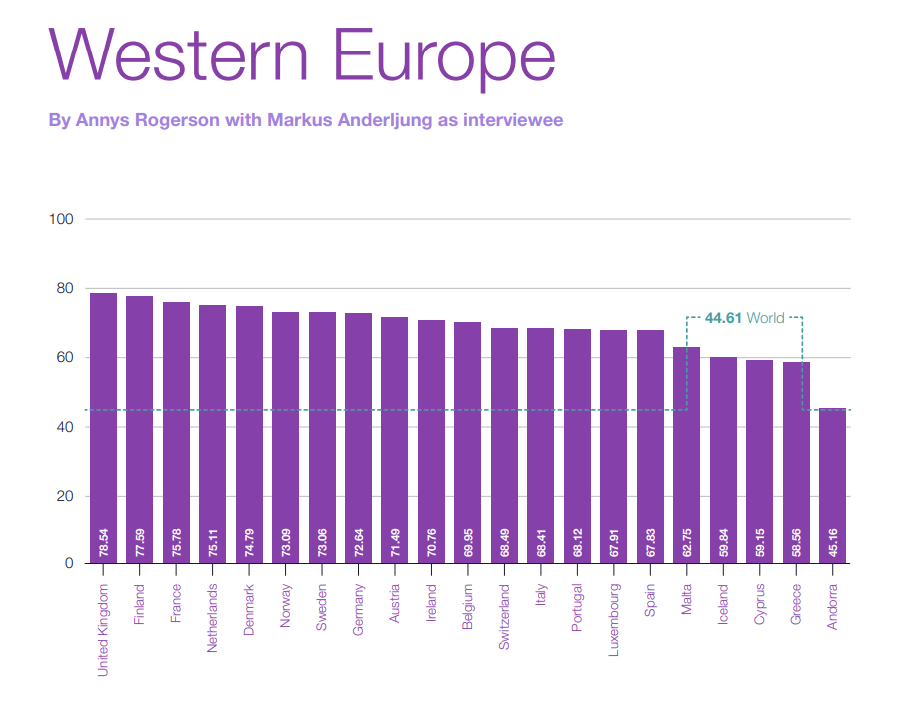

Currently, the UK maintains its position as a leading country in AI development. According to the 2022 Government AI Readiness Index report produced by Oxford Insights and the IDRC, the United Kingdom received a score of 78.54, ranking first in Western Europe, compared to the world average of 44.61. The governments of 194 countries are researched with metrics applied under four main clusters: governance, infrastructure and education, skills and education, and government and public services. Taking all of this into account, with a rapidly growing industry, it makes sense that the UK has decided to go with a gentler approach to tackling potential AI threats.

A white paper with the title “A pro-innovation approach to AI regulation”, published and presented to Parliament by the Secretary of State for the Department for Science, Innovation and Technology (DSIT) on March 23rd, contains expert analysis of the current regulatory environment and seven key points for action. In the document, Michelle Donelan MP puts emphasis on the speed with which the UK has adopted AI technologies over recent years, making it a leader in AI research and innovation. Moreover, the secretary underlines the investments made to fund AI development, one example being an investment of £250m in UK Research and Innovation to help it in the AI race, made less than a month before the document was issued. The framework explains the need to regulate the use of AI by users and companies, rather than to restrict the technology itself. It adopts a principles-based approach, which, according to the paper, makes the framework agile and proportionate. The main principles set out in the document are safety, transparency, fairness and accountability.

The white paper also supports CNBC’s claim that the regulations are not aimed specifically at ChatGPT but at AI tools in general: ChatGPT is not mentioned by name either in the document or in Michelle Donelan MP’s presentation of it to Parliament. Therefore, the CNBC author’s understanding that the UK intends to apply already existing regulations, rather than establish new ones or restrict the technology, is correct.

The EU

The article expresses that other European countries will need to put more effort in limiting the implementation of AI than the UK, with the reason for this difference being the new autonomy in digital laws in the UK since leaving the EU. As previously mentioned, Europe takes one of the leading positions in AI regulation, and tech regulation in general for that matter. The bloc has introduced numerous initiatives for risk regulation and one such example is The European AI Alliance, which was launched by the European Commission in 2018 in order to establish public dialogue on the matter as well as trust in AI and Robotics. The Alliance is, in fact, overseeing the public discourse on AI and the development of chatbots such as ChatGPT. A publication by one of the Alliance’s experts Norbert Jastroch with the title “The Chatbot Hype: Implications for Risk Regulation in Artificial Intelligence” states that “The current chatbot hype is supposed to influence the resulting work on the AI act to be finalised this year.”

“The Act” refers to the same legislation discussed in the CNBC publication. It was put forward more recently, in 2021, when the EU proposed the first-ever regulatory framework on artificial intelligence. The CNBC article gives insights into the European AI Act, highlighting as the main concern and focus of its rules the use of AI in critical infrastructure, education, law enforcement, and the judicial system. These areas of application are considered to be high-risk. The Act initially proposes only minimal transparency obligations on systems such as chatbots or deep fakes (page 3). The author of the article states that this initial draft does not take into account the infinite potential of generative AI. The article cites a Reuters publication about EU industry chief Thierry Breton, in which it is stated that the bloc’s draft rules consider ChatGPT to be a general purpose AI system, which has high-risk uses such as credit scoring or job candidate selection. This claim, however, is unverifiable and cannot be supported by a primary source. It appears only in other articles on the development of the legislation.

The article includes a checkable quotation from Germany’s Federal Commissioner for Data Protection, given to Handelsblatt, in which he states that it is possible for the country to take the same action against ChatGPT as Italy. The CNBC article explains that France and Ireland have contacted Italy’s privacy regulators to learn more about their findings in order to develop their own plans for action. The French Minister of Digital Transition, Jean-Noël Barrot, supported this claim in an interview with La Tribune, released on April 6th. In it he firmly denied the likelihood that France will ban the chatbot, but stated that the national ethics committee is expected to release its updated stance on ChatGPT in a few months.

According to the Irish Examiner, Ireland’s Data Protection chief Helen Dixon explained during a Bloomberg conference that ChatGPT needs to be regulated and the watchdog is still learning how to do that properly. In addition, EuroNews confirms the claim that Ireland is one of the countries which is paying close attention to Italy’s ban, again citing the Ireland Data Protection Commission.

The claim in the CNBC article regarding the decision of Sweden’s data protection authority not to ban ChatGPT cannot be traced back to its primary source. However, it also appears in Reuters, Decrypt, and other publications about Italy’s ChatGPT suspension.

U.S.

Regarding the use of AI in the United States, in January 2023 the National Institute of Standards and Technology (NIST) published a framework that supports the claims in the CNBC article. The framework draws on NIST’s own research and provides references to its work. It covers AI risks and trustworthiness and the effectiveness of the AI Risk Management Framework, and is divided into two main parts: Foundational Information, and Core and Profiles. In the article, CNBC states that NIST has put out an Artificial Intelligence Risk Management Framework, which gives companies that use AI guidance and management tools for potential harms. This claim is therefore checkable and reliable.

In the article it is also stated that a non-profit research group has filed a complaint with the Federal Trade Commission to suspend development of OpenAI’s ChatGPT tool, alleging that it violates the agency’s AI guidelines.

According to the official website of the Center for AI and Digital Policy (CAIDP), a 46-page complaint was indeed filed with the FTC on March 30th, 2023, regarding the use of AI, asking for an investigation and for a halt to the development of large language models for commercial purposes. The complaint accuses OpenAI of violating Section 5 of the FTC Act, which prohibits unfair and deceptive business practices, as well as the agency’s guidance on artificial intelligence products, and calls the latest version of ChatGPT “biased, deceptive, and a risk to privacy and public safety.”

According to the chronology on the CAIDP’s official website, the organisation’s President, Marc Rotenberg, and its Senior Research Director, Merve Hickok, sent a letter to the FTC Commissioners pointing out that the Commissioners had previously declared that AI products should be “transparent, explainable, fair, and empirically sound while fostering accountability.” These publications confirm the claims made in CNBC’s article.

China

The CNBC article states that China has been eager to make sure that its technology giants, such as Baidu, Alibaba and JD.com, are developing products in line with its strict regulations on deepfakes. It is mentioned that its “first-of-its-kind” regulation on altered videos and images was introduced in March.

According to an article titled “Deepfake Technology and Current Legal Status of It” by Min Liu and Xijin Zhang, China has not adopted specific legislation on deepfake videos and images, but it has standardised and restricted their creation. The article also describes restrictions on the distribution of forged portraits of people, intended to protect citizens’ rights. However, according to the article, no punitive provisions are attached to the labelling obligation, which makes “the declaration of the provisions more meaningful and its resulting in the absence of legal protection”.

Another statement from the CNBC article regarding the large tech companies mentioned earlier is that they have announced plans for ChatGPT rivals. It has been confirmed from various websites, including the companies’ own official sites, that the three technology giants Baidu, Alibaba and JD.com have planned and unveiled their new AI chatbots. A BBC News article from April 11 reports that Alibaba has rolled out its own “ChatGPT style” artificial intelligence product named Tongyi Qianwen. The product, which will be a rival to ChatGPT, is described as able to “perform a number of tasks including turning conversations in meetings into written notes, writing emails and drafting business proposals”. The other two companies, Baidu and JD.com, have shared that their new AI products would help improve their work in various ways. These statements were shared by the companies themselves and also support CNBC’s claim about the development of new alternatives to ChatGPT.

Conclusion

We have established the claim that “Italy became the first Western country to ban ChatGPT” to be true. The information was confirmed as factual by primary sources and data. Claims about other countries in the CNBC article are also correct and were backed by regulatory frameworks and acts, as well as by statements from national data protection watchdogs.

However, it is worth mentioning that since the release of the article, ChatGPT was made accessible in Italy once again in late April, after OpenAI implemented changes to its privacy settings. It can be concluded that with the increase in applications of generative AI and the lightning-fast pace of advancement of this technology, the potential risks and threats for users are also growing. Therefore, further developments in ChatGPT’s privacy policy can be expected, along with more efforts by countries to actively exert control, be it through laws, frameworks, or suspensions.

RESEARCH | ARTICLE © Victoria Pehlivanova, Kristin Bencheva, Nina Nyagolova,

Sofia University, Bulgaria
